Blog

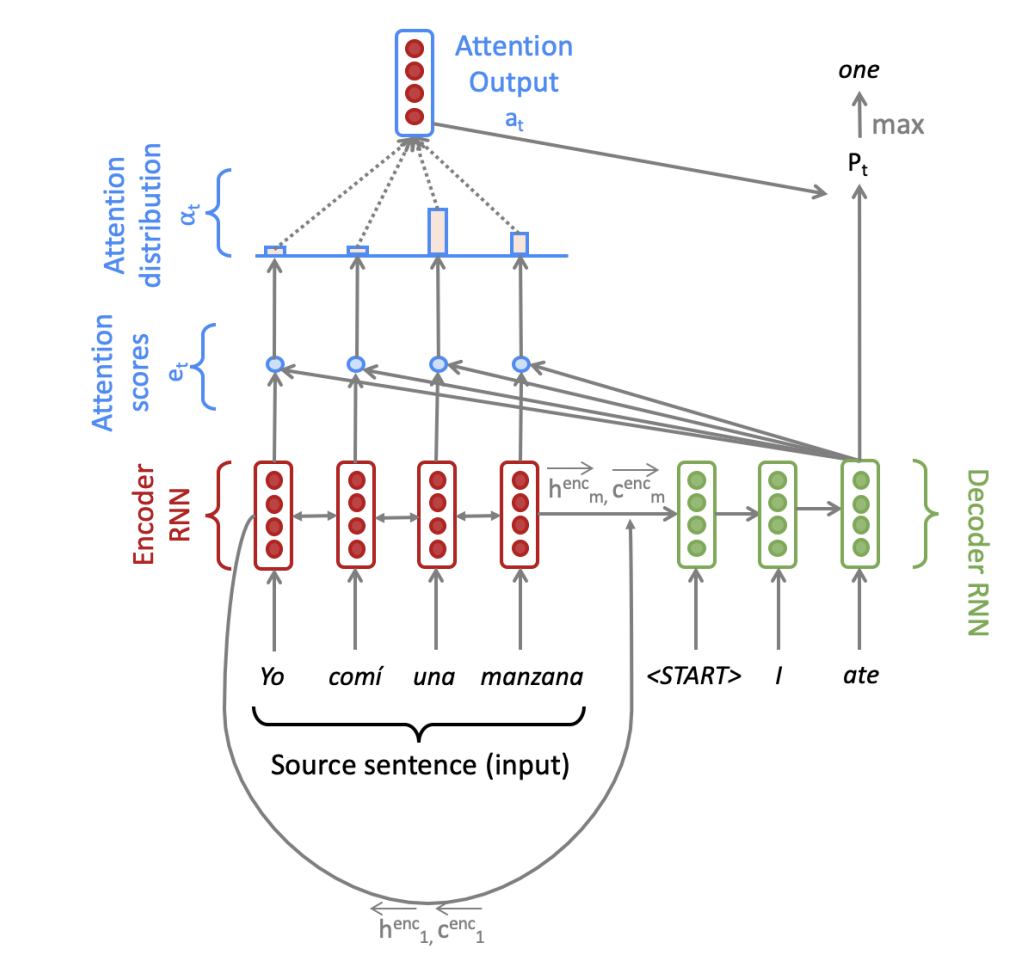

A huge portion of the world’s knowledge is represented and stored as text. Learning to understand the world therefore depends heavily on being able to understand, generate, and process these textual transfers of information. With this lens in mind, I’m very excited to have taken Stanford’s NLP and NLU courses and to run experiments of my own. These articles cover topics in improving our understanding of natural language!

These articles cover topics in EE126: Probability and Random Processes, which I took in Spring 2018 under Professor Kannan Ramachandran. Over the last two years I’ve been very fortunate to work in the BAIR lab, and these problems are inspired by my time there!

This section covers NLP blog posts and papers I’ve worked on recently. My general areas of interest are multi-document summarization and fact-based text generation and retrieval.