Blog

A huge portion of the world's knowledge is represented and stored as text. A large part of learning to understand the world therefore comes from being able to understand, generate, and process this written information. With that lens in mind, I'm very excited to have taken Stanford's NLP and NLU courses and to run experiments of my own. These articles cover topics aimed at improving our understanding of natural language!

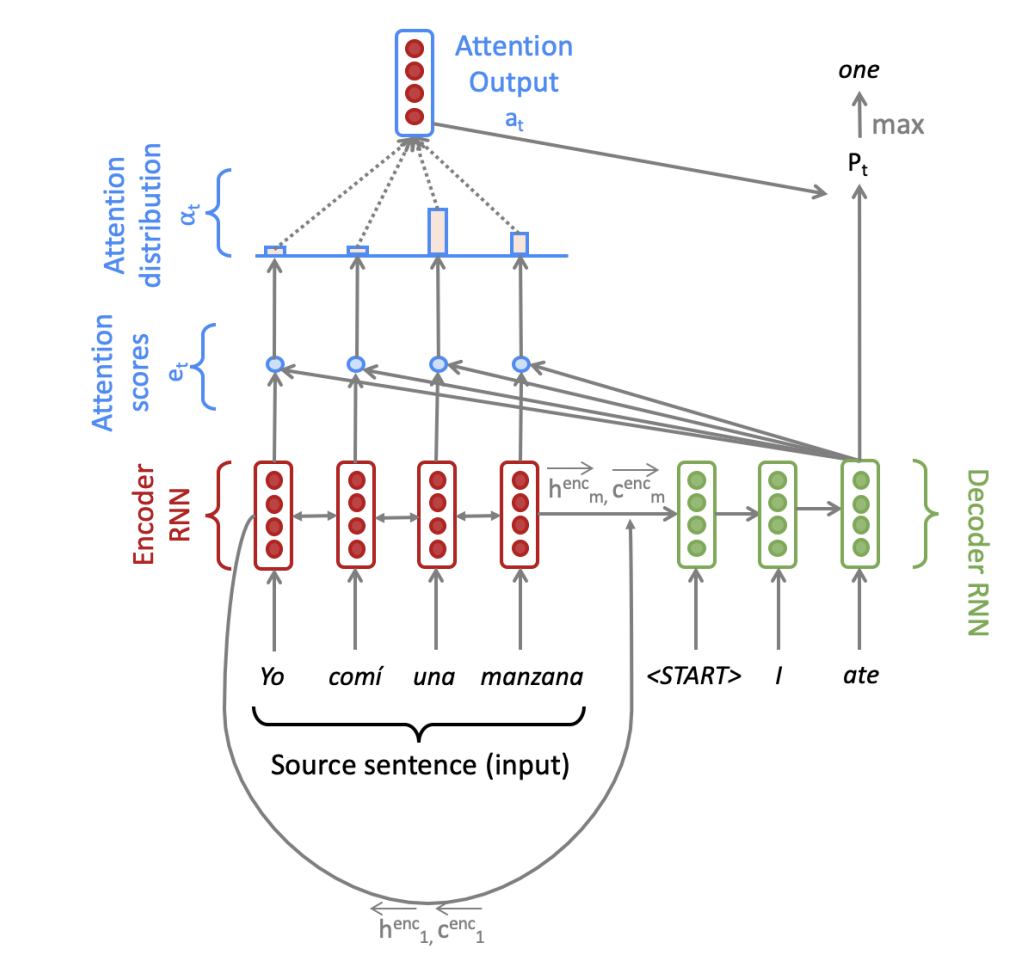

Neural Machine Translation

How can you train a baseline model to translate between languages? The first natural language processing application I interacted with was Google Translate, a machine translation system that converts text from one language to another. What once seemed insanely arcane to me has reached the point where one can set up and train such a model from scratch. Let's build one up, starting from input sentences in one language and producing output sentences in another.

February 12, 2021